Theme: Use your IBM Power9 resources for AI data processing tasks

By now, most have heard the saying:

“If you are not using AI, you are behind.”

Power9 is commonplace throughout the industry. For companies using IBM Power, and Power9 in particular, here is an idea of how you might use your existing or easily accessible cloud-based Power resources to jump-start your journey to AI.

“Enterprise Journey to AI, use what you have when starting out…”

AI falls into two broad classes according to who consumes it: Consumer AI and Enterprise AI. Consumer AI aids individuals; everyone has heard about ChatGPT. Enterprise AI serves organizations by automating business processes and analyzing large, complex data sets, which requires a scalable architecture and seamless integration with existing systems. For this article, let’s focus on Enterprise AI.

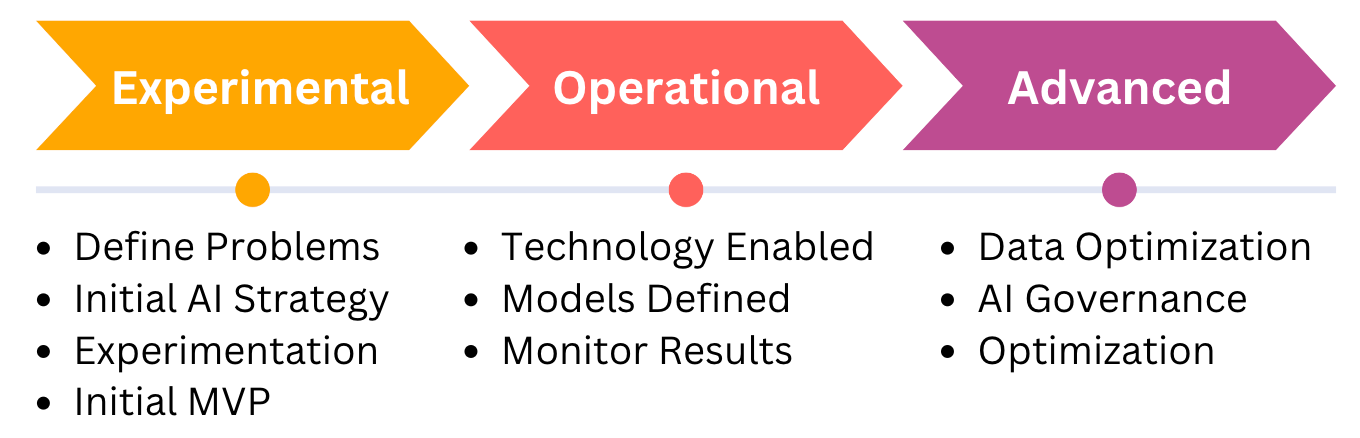

The journey to AI for the enterprise will travel through three stages: Experimental, Operational, and Advanced.

Even though there is an abundance of AI success stories, many organizations are just starting out on their AI journey. Let’s focus on just one idea in the Experimental stage for those organizations starting their path to AI.

We’ve heard that Nvidia GPUs (originally designed as graphics processors for video cards) process AI workloads faster than the traditional compute architectures found in the typical enterprise. These GPUs are expensive to buy, generally need to be deployed at scale to pay off, and are costly to rent or access as a cloud service.

This cost might not be appropriate for the Experimental stage. During the Experimental stage, many of your AI activities might be closer to “advanced analytics” than to the parallel processing and advanced matrix mathematics that GPUs usually handle when large datasets are involved. Those more complex operations might be attempted during the Operational and Advanced stages of your AI journey.

What are economical alternatives to using expensive high-end hardware for AI processing during your Experimental stage? Specifically, what types of AI-related processing can be done using your existing accessible computing resources?

For companies using IBM Power either on-prem or in the cloud, there is an interesting path to high-performing AI activities appropriate for the Experimental stage and beyond.

During the Experimental stage, the high-performing RISC processors in IBM Power servers offer an intermediate step between typical enterprise-class Intel x86 servers and high-end AI hardware such as GPUs. If you already use IBM Power, then there are several advantages:

1) You already own it or have a cloud subscription to it.

2) You can run either AIX (Unix) or Linux using open-source tooling.

3) You are already familiar with the Power platform.

4) For many AI use cases, you might get better performance than x86.

If you are running Power in the cloud, you have additional benefits. The points below are specific to Skytap on Azure running IBM Power, but the same ideas apply to any cloud provider offering IBM Power as a Service:

a) To economize spend, you can use built-in schedulers to shut off all the provisioned servers during off hours. Compute costs only accumulate while the server is “running.”

b) A REST API can drive the entire Skytap platform. With an API, you can treat the Power AI workload similarly to what is commonly called “spot instances.” You can create the Power instance on the fly via automation, transfer data to the server, launch your AI task, extract the result, and delete the entire instance when finished. This minimizes costs.

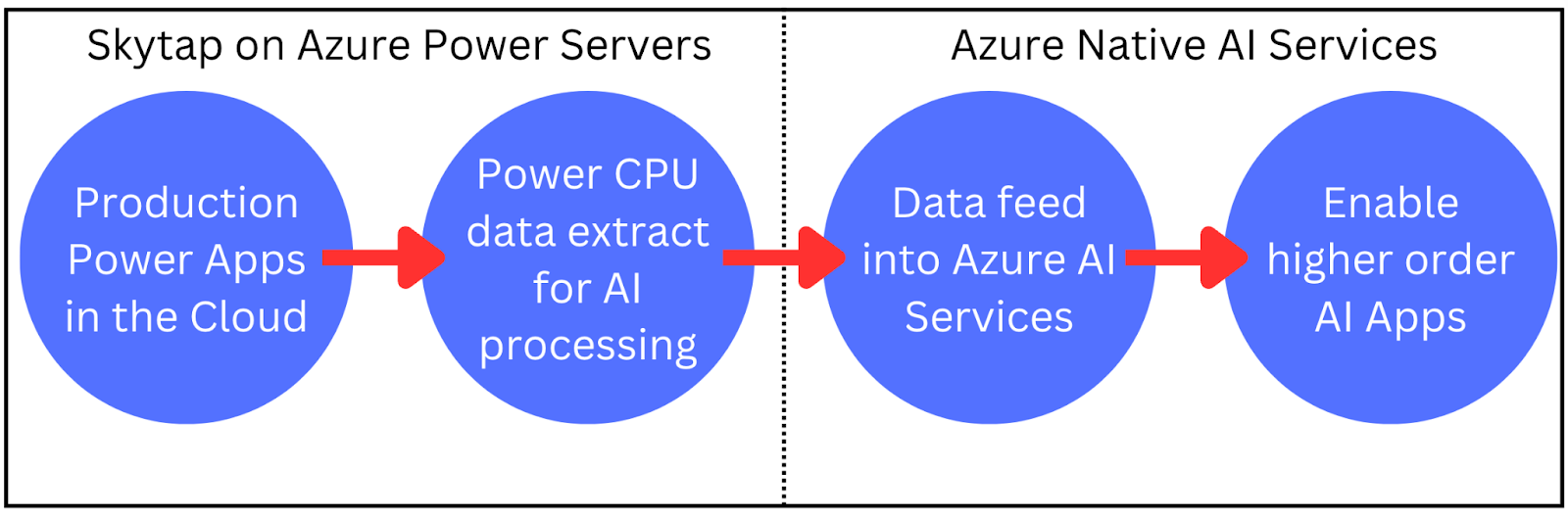

c) You can create an integrated high-speed network connection from Skytap on Azure running Power to other Azure AI services. This AI pipeline allows you to leverage familiar and economical processing using IBM Power and then pass the outputs into other high-order AI services running natively in Azure.

d) The operation of the Power server instance in Skytap closely matches the on-prem experience, so no additional specialized skill sets are needed.
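The create, run, and delete pattern from item b) can be sketched in a few lines. This is only an illustration in Python: `FakePowerCloud` and all of its methods are hypothetical, in-memory stand-ins and are not the Skytap REST API. The point it shows is the shape of the automation, where the instance is always deleted even if the task fails, so compute costs stop accumulating.

```python
from contextlib import contextmanager


class FakePowerCloud:
    """In-memory stand-in for a cloud control plane (hypothetical, NOT the Skytap API)."""

    def __init__(self):
        self.instances = {}
        self._next_id = 1

    def create_instance(self, template):
        instance_id = f"lpar-{self._next_id}"
        self._next_id += 1
        self.instances[instance_id] = {"template": template, "files": {}}
        return instance_id

    def upload(self, instance_id, name, data):
        self.instances[instance_id]["files"][name] = data

    def run(self, instance_id, name):
        # Placeholder "AI task": count the rows in the uploaded file.
        text = self.instances[instance_id]["files"][name]
        return len(text.splitlines())

    def delete_instance(self, instance_id):
        del self.instances[instance_id]


@contextmanager
def ephemeral_instance(cloud, template):
    """Create an instance, hand it to the caller, and always delete it afterwards."""
    instance_id = cloud.create_instance(template)
    try:
        yield instance_id
    finally:
        # Runs on success and on failure, so nothing is left running.
        cloud.delete_instance(instance_id)


def run_job(cloud, template, data):
    """Create the instance, transfer data, launch the task, and return the result."""
    with ephemeral_instance(cloud, template) as instance_id:
        cloud.upload(instance_id, "reviews.json", data)
        return cloud.run(instance_id, "reviews.json")


cloud = FakePowerCloud()
row_count = run_job(cloud, "power9-linux", '{"a": 1}\n{"a": 2}\n')
print(row_count, len(cloud.instances))  # prints: 2 0
```

In a real pipeline, each method of the stand-in class would be replaced by a call to your provider’s documented API; the `try`/`finally` structure is the part worth keeping.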

You could already have access to a powerful AI processing platform; it’s your existing IBM Power resources!

If your on-prem Power resources are near maximum capacity, you can extend your existing Power infrastructure into the Cloud as your beginning and intermediate step toward advanced AI. In the diagram above, production IBM Power applications are already running in Skytap on Azure. IBM Power exists inside the Azure Regional Data Centers.

What’s an example of AI data processing that might work well on IBM Power versus Intel x86?

There are many types of AI, including:

- Image, Video, Speech processing

- Natural Language Processing (NLP)

- Predictive Analysis

- Autonomous systems (robots)

AI is nothing without data. Data processing activities that are part of the NLP AI workflow might be a great use of IBM Power in the cloud. NLP requires both intensive I/O and computational processing.

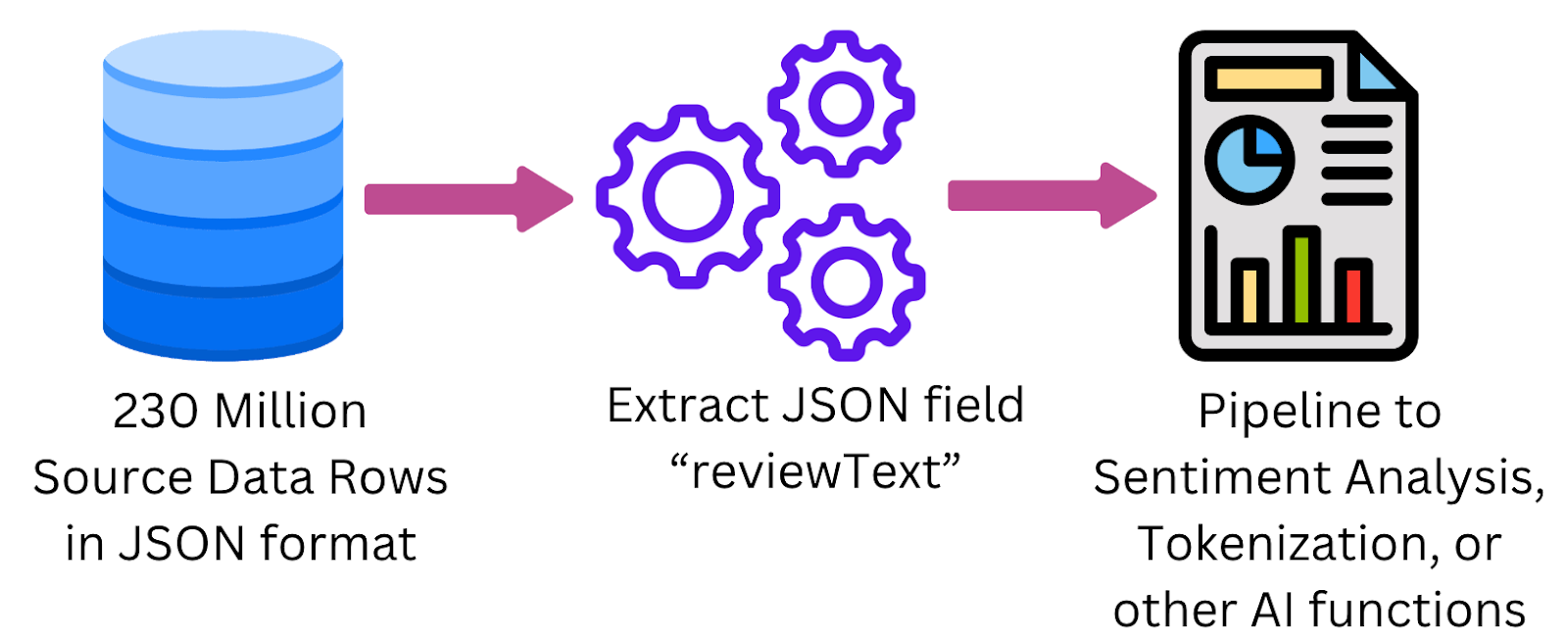

Here is a generic example to consider. It compares how long two servers take to process ~232,000,000 rows of JSON data from Amazon product reviews. This type of activity would be a common precursor to many AI workflows, where you must first isolate your target data set before you begin AI model creation.

We compared a modest IBM Power9 server (LPAR) to a 2.67 GHz Intel Xeon x86 server. The Power server had one full vCPU assigned to it. Both servers had 32 GB of memory and a similar SSD storage allotment to hold the Amazon review data locally.

We intentionally compared Power9 instead of Power10 since Power9 is found in many companies today. Similarly, we picked an x86 configuration representing a standard enterprise server. Remember, the goal is to use what you already have “in-house” during your AI Experimental phase instead of purchasing or renting high-end AI hardware. In addition, Power9, via a cloud-based service like Skytap on Azure, might be an economical alternative to high-end AI computing resources.

The test:

We created a C program to perform the JSON data traversal operation. We compiled the C program natively on IBM Power9 running Linux and on x86 running Linux. The code reads each of the 232 million JSON rows and, for each row, extracts the text of the field called “reviewText,” which is one or more sentences. In a more complex AI pipeline, the extracted text might then be passed to a sentiment analysis engine to score the text from positive to negative. Another AI pipeline destination would be tokenization, where the individual words are parsed and converted into numerical vectors, which can feed generative AI models similar to ChatGPT.

One row of sample data might look like this:

{"overall": 1.0, "verified": true, "reviewerID": "A2PR1HM2173XW0", "asin": "B017O9P72A", "reviewText": "The coffee machine stopped working after one week. I was making three pots per day which I consider normal use.", "summary": "Not durable", "unixReviewTime": 1488240000}
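The per-row work can be illustrated with a short sketch. The benchmark itself used a C program that is not reproduced here; the Python below is only an assumption about the general shape of the task, using the field name as it appears in the sample row. The function name `extract_review_text` is hypothetical, and the choice to skip malformed rows rather than stop is an illustrative design decision.

```python
import io
import json


def extract_review_text(lines):
    """Yield the reviewText of each JSON row, skipping blank, malformed, or text-less rows."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            row = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip rows that are not valid JSON
        if not isinstance(row, dict):
            continue
        text = row.get("reviewText")
        if isinstance(text, str):
            yield text


# A tiny in-memory stand-in for the review file: one JSON object per line.
sample = io.StringIO(
    '{"overall": 1.0, "reviewText": "The coffee machine stopped working '
    'after one week.", "summary": "Not durable"}\n'
    '{"overall": 5.0, "summary": "No review text in this row"}\n'
    'not valid json\n'
    '{"overall": 4.0, "reviewText": "Works well."}\n'
)

texts = list(extract_review_text(sample))
print(len(texts))  # prints: 2
```

Because the function iterates line by line, it can be pointed at an open file object just as easily as at the in-memory sample, so the whole data set never has to fit in memory at once.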

The results show the time it took to process the 232 million JSON rows on an IBM Power LPAR and a typical enterprise-class Intel-based server.

Results:

x86 2.67 GHz, Intel Xeon, 32 GB memory

Time to read and process 232,901,943 JSON records:

683 seconds

Power9, 1 vCPU LPAR (EC=1), 32 GB memory

Time to read and process 232,901,943 JSON records:

387 seconds

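That’s a 55% difference between completion times, measured against the average of the two. Put another way, the IBM Power instance finished in about 43% less time than x86, which makes it roughly 1.76 times faster on this task.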
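The arithmetic behind the comparison can be checked in a few lines. This Python sketch uses only the row count and timings reported in the results; the function name `compare` and the dictionary keys are illustrative. It treats the percentage as the gap divided by the average of the two times, which is the reading that reproduces the 55% figure.

```python
ROWS = 232_901_943
X86_SECONDS = 683
POWER9_SECONDS = 387


def compare(rows, slow_s, fast_s):
    """Return several ways of expressing the gap between two completion times."""
    gap = slow_s - fast_s
    return {
        # Gap relative to the average of the two times.
        "percent_difference": 100 * gap / ((slow_s + fast_s) / 2),
        # Gap relative to the slower run: how much time the faster run saved.
        "time_saved_percent": 100 * gap / slow_s,
        # Ratio of the two times.
        "speedup": slow_s / fast_s,
        "slow_rows_per_s": rows / slow_s,
        "fast_rows_per_s": rows / fast_s,
    }


result = compare(ROWS, X86_SECONDS, POWER9_SECONDS)
print(round(result["percent_difference"], 1))  # prints: 55.3
print(round(result["time_saved_percent"], 1))  # prints: 43.3
print(round(result["speedup"], 2))             # prints: 1.76
```

In throughput terms, that works out to about 341,000 rows per second on the x86 server and about 602,000 rows per second on the Power9 LPAR.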

Though benchmarks like this are subjective, this one hints at the potential of using IBM Power servers as the initial foundation of your AI journey. If you are in the “Experimental” stage, consider using IBM Power on-prem or in the Cloud with a service like Skytap on Azure. Using IBM Power allows you to work with familiar resources. You might even accelerate your AI journey from Experimental to fully Operational.